The Dawn of Computing: Early Processor Beginnings

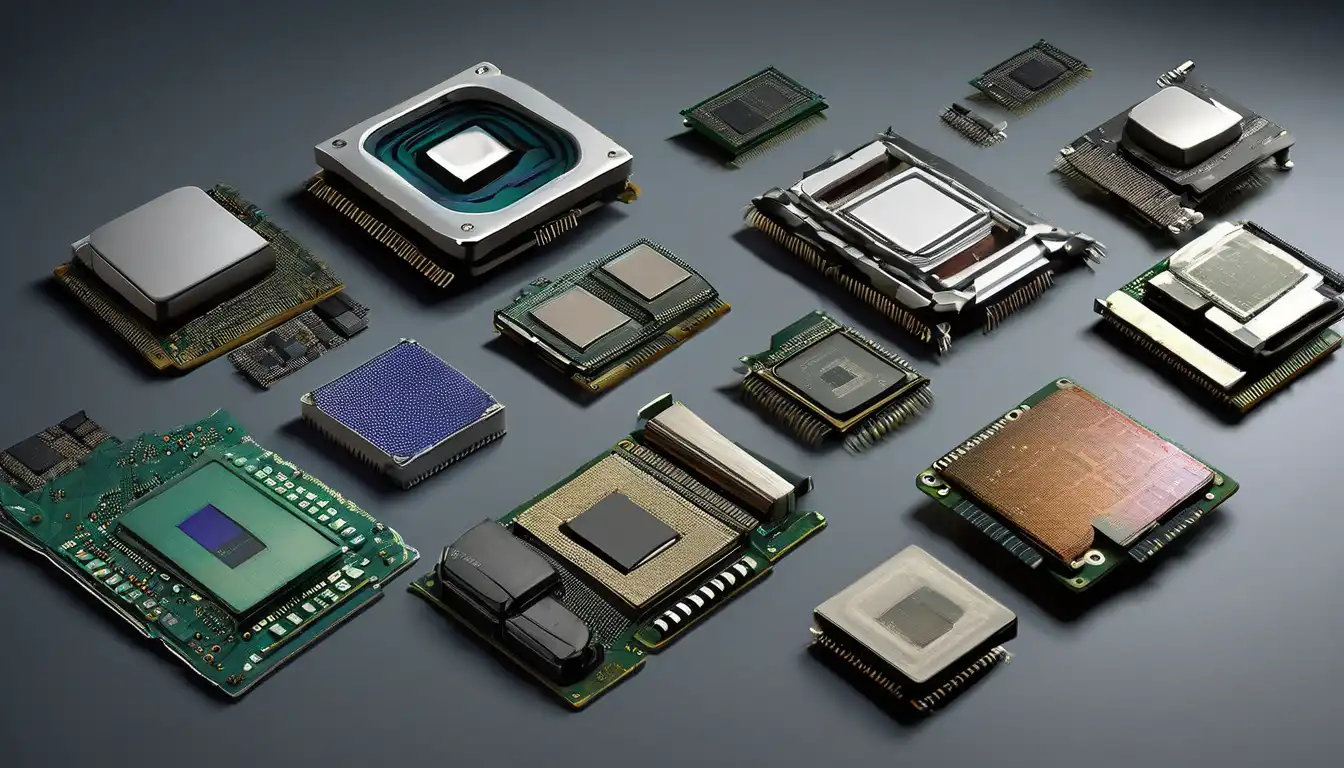

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with massive vacuum tube systems that occupied entire rooms, processors have transformed into microscopic marvels capable of billions of calculations per second. This transformation didn't happen overnight; it came through decades of innovation, competition, and relentless pursuit of efficiency.

In the 1940s and 1950s, early computers like ENIAC used vacuum tubes as their primary processing components. These systems were enormous, consuming vast amounts of electricity and generating tremendous heat. ENIAC alone contained roughly 17,500 vacuum tubes, each serving as a basic switching element. The limitations were obvious: frequent tube failures, massive power requirements, and sheer physical size made these early machines impractical for widespread use.

The Transistor Revolution

The invention of the transistor in 1947 at Bell Labs marked the first major turning point in processor evolution. Transistors could perform the same functions as vacuum tubes but were smaller, more reliable, and consumed significantly less power. This breakthrough paved the way for the second generation of computers in the late 1950s and early 1960s.

IBM's 7000 series computers exemplified this transition, using transistors instead of vacuum tubes. These systems were still large by modern standards but represented a massive improvement in efficiency and reliability. The transistor's invention earned William Shockley, John Bardeen, and Walter Brattain the 1956 Nobel Prize in Physics and set the stage for even more dramatic advancements.

The Integrated Circuit Era

The next quantum leap came with the development of integrated circuits (ICs) in the late 1950s. Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor independently developed the first practical integrated circuits, which combined multiple transistors on a single semiconductor chip.

This innovation led to the third generation of computers in the 1960s. IBM's System/360, introduced in 1964, became the landmark system of this era. It was designed as a family of compatible models sharing a single instruction set, so software could move between machines of different sizes, and it used IBM's Solid Logic Technology (SLT) hybrid circuit modules, a significant step toward the modern processor. The steady increase in how many components could be packed into a small space is the trend that Moore's Law would soon describe.

Moore's Law and the Microprocessor

In 1965, Gordon Moore observed that the number of components on an integrated circuit was doubling roughly every year; in 1975 he revised the rate to a doubling approximately every two years. This observation, which became known as Moore's Law, has driven processor development for decades. The Intel 4004, widely regarded as the first commercial microprocessor, emerged in 1971. This 4-bit processor contained 2,300 transistors and operated at 740 kHz, modest by today's standards but revolutionary at the time.
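To make the doubling arithmetic concrete, the short Python sketch below projects a transistor count forward with the idealized formula N(t) = N₀ · 2^((t − t₀)/T), where N₀ is the starting count, t − t₀ the elapsed years, and T the doubling period. It assumes the commonly cited two-year doubling period and uses the 4004's 2,300 transistors from 1971 as its starting point. The function name `project_transistors` is hypothetical, and the results are idealized projections, not the transistor counts of real chips.

```python
def project_transistors(n0: int, years: float, doubling_period: float = 2.0) -> float:
    """Idealized Moore's Law projection (an illustrative assumption, not real chip data).

    n0: transistor count in the starting year
    years: number of years elapsed since the starting year
    doubling_period: assumed years per doubling (two is the commonly cited figure)
    """
    # Each full doubling period multiplies the count by 2.
    return n0 * 2 ** (years / doubling_period)


# Starting point taken from the text: the Intel 4004 (1971) with 2,300 transistors.
START_YEAR, START_COUNT = 1971, 2300

for year in (1971, 1981, 1991):
    projected = project_transistors(START_COUNT, year - START_YEAR)
    print(year, round(projected))
```

Ten years at a two-year doubling period is five doublings, a factor of 2^5 = 32 (from 2,300 to 73,600); twenty years is ten doublings, a factor of 1,024 (to 2,355,200). The exponential form is why a modest-sounding doubling period compounds into millions of transistors within two decades.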

The 4004 was followed by the 8-bit Intel 8008 in 1972 and the groundbreaking 8080 in 1974. The 8080 powered the Altair 8800 (1975), one of the first personal computers, and these chips marked the beginning of the microprocessor era that would transform computing from an enterprise-only technology into something accessible to individuals and small businesses.

The Personal Computer Revolution

The late 1970s and 1980s saw processors become the heart of the personal computer revolution. Intel's 8086 and 8088 processors were introduced in 1978 and 1979 respectively, and the 8088 powered IBM's Personal Computer in 1981. The x86 architecture born from these processors would dominate personal computing for decades to come.

Competition intensified during this period as Zilog (with the 8-bit Z80) and Motorola (with its 68000 series) challenged Intel's dominance. The Z80 powered many early microcomputers, while the 68000, which paired 32-bit internal registers with a 16-bit data bus, drove machines such as the original Apple Macintosh. These 16-bit-class processors offered significantly improved performance over their 8-bit predecessors, enabling more sophisticated software and graphical interfaces.

The 32-bit and RISC Revolutions

The 1980s also saw the emergence of 32-bit processors and Reduced Instruction Set Computing (RISC) architectures. Intel's 80386, introduced in 1985, brought true 32-bit processing to the x86 line, while companies like Sun Microsystems (with SPARC) and MIPS developed RISC architectures that delivered higher performance in workstations and servers.

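RISC processors use a small set of simple instructions that can each be executed quickly and pipelined efficiently, in contrast to the Complex Instruction Set Computing (CISC) approach of x86 processors, where a single instruction can perform several operations. This architectural debate continues to influence processor design today, and modern processors often blend both approaches: current x86 chips, for example, translate their complex instructions internally into simpler RISC-like micro-operations.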

The GHz Race and Multicore Evolution

The 1990s and early 2000s were characterized by the "megahertz race" as processor manufacturers competed to deliver ever-higher clock speeds. Intel's Pentium processors and AMD's competing offerings pushed clock speeds from tens of MHz to multiple GHz. By the mid-2000s, however, this approach ran into physical limits: further increases in clock speed demanded more power and produced more heat than chips could practically dissipate.

The industry responded by shifting to multicore processors. Instead of making single cores faster, manufacturers began placing multiple processor cores on a single chip. This approach, pioneered by IBM's POWER4 in 2001 and adopted by Intel and AMD in their dual-core chips of 2005, allowed continued performance improvements without corresponding increases in clock speed, provided that software could split its work across the cores.

Specialization and Heterogeneous Computing

Modern processor evolution has moved toward specialization and heterogeneous computing. Today's processors often include not only multiple CPU cores but also integrated graphics processors (GPUs), AI accelerators, and specialized units for tasks like video encoding and cryptography.

This trend toward specialization reflects the diverse workloads of modern computing. From mobile devices that prioritize power efficiency to servers handling massive parallel workloads, processors have evolved to meet specific needs rather than pursuing one-size-fits-all solutions.

Current Trends and Future Directions

Today's processor evolution focuses on several key areas: artificial intelligence acceleration, quantum computing research, and continued improvements in energy efficiency. NVIDIA, for example, has added dedicated tensor cores to its GPUs to speed up the matrix math at the heart of neural networks, while research institutions worldwide are making progress toward practical quantum computers.

The slowing of traditional Moore's Law scaling has led to innovations in 3D chip stacking, new materials like gallium nitride, and architectural improvements that extract more performance from each transistor. The future likely holds even more specialized processors tailored to specific applications, from autonomous vehicles to edge computing devices.

The Impact on Society

The evolution of processors has fundamentally transformed society. From enabling the internet revolution to powering smartphones that put supercomputers in our pockets, processor advancements have driven technological progress across every sector. The continuous improvement in processing power has made possible applications that were science fiction just decades ago.

As we look to the future, the evolution of processors will continue to shape our world. Whether through quantum computing breakthroughs or more efficient traditional processors, the journey that began with vacuum tubes continues to accelerate, promising even more remarkable transformations in the decades to come.

The story of processor evolution is far from over. Each generation builds upon the last, creating a technological legacy that continues to push the boundaries of what's possible in computing.